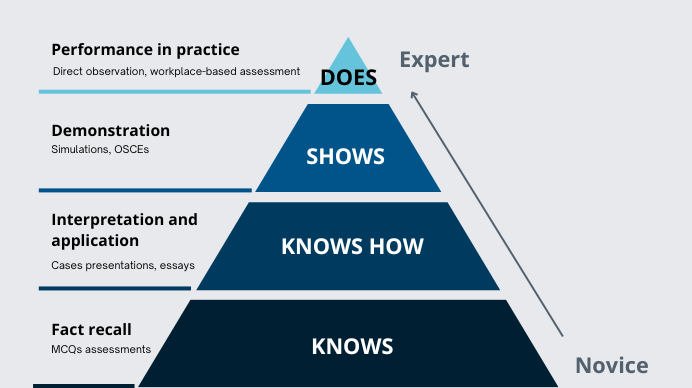

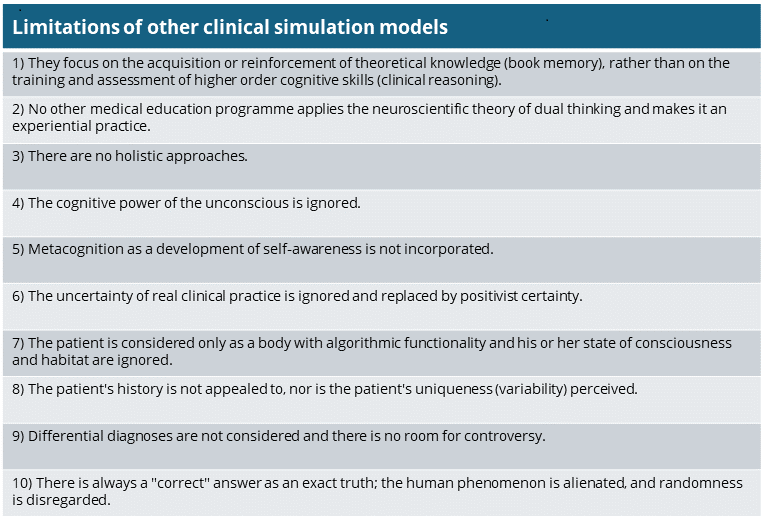

Numerous clinical simulation models exist to help students reinforce theoretical knowledge, but they do not train and assess the higher-order thinking skills needed for decision-making in real clinical practice (especially in uncertain situations). In terms of Miller's pyramid, which represents the most recurrent scheme in the field of medical education and professional competence, most methodologies barely cover the lowest level "knowing", whereas the clinical reasoning simulator Practicum Script covers also "knowing how" and even "showing how", promoting meaningful and contextual learning.

Madrid, 11th July 2024. The current challenge of medical training plans for the assessment of real clinical competence lies at the apex of the pyramid conceptualised by the American psychologist Miller G. [1] in 1990: the "does-action". To get there, one must first reach the states of knows-knowledge, knows how-competence and shows how-performance . On the way, Shumway J.M. and Harden R. M. [2] consider that it is necessary to assess what students do in practice and how they apply their knowledge of basic and clinical sciences to patient care. Brailovsky C.A, [3] goes further and points to the relevance of reflective skills and reasoning in clinical problem solving.

There is no doubt that clinical reasoning skills are essential for clinical decision-making. However, current assessment methods are limited in testing clinical thinking and uncertainty management. It is easiest to point to textbook memorisation to validate acquired knowledge and this is where most of the available e-learning solutions are anchored, presenting academic exercises, useful for memorising theoretical content and passing exams but not conducive to meaningful learning. This is where an ethical dilemma arises in medical education: should medical schools prepare the student for an exam or for treating a patient?

Practicum Script takes a quantum leap in medical pedagogy by embracing reflective practice in high-fidelity simulated environments and moving away from approaches that urge students to select a single solution around ideally defined algorithmic clinical problems. Such approaches, as Cooke S. (4) suggested, lack authenticity and can create a misleading impression of certainty. Practicum Script delves into the complexity and uniqueness of each patient and poses challenges that encourage clinical judgement. It seeks to strengthen students' ability to integrate and apply different types of knowledge, as well as to understand and weigh different plausible perspectives.

Hornos E. emphasises [5] that the Practicum Script methodology is the only one based on the dual thought process theory of cognitive psychology and neuroscience, which is the most widely accepted theory to explain human cognition. The programme integrates a probabilistic approach to two-step decision making and allows the student to reproduce the conscious and unconscious processes of reasoning faced with a real patient. In addition, one of the main distinguishing features of Practicum Script is the type and purpose of the questions asked. Multiple choice questionnaires (MCQs), which are commonly used by most platforms, present a list of possible answers for learners to select the most appropriate one. This, as Harden R. M. [6] points out, implies a possible "recognition effect" and ignores intuitive reasoning.

Practicum Script addresses the whole process of the reasoning task, with real clinical cases and special emphasis on heuristics. Thus, the first challenge students face is to generate up to 3 hypotheses free text format (system 1, heuristic reasoning), without being offered possible answer options or suggesters of terms. They must then argue their hypotheses, identifying key patient data in favour and against their hypotheses, in order to foster their critical judgement. These steps are complemented by five clinical scenarios in which the student must decide on the impact of new information on the initial hypotheses (system 2, analytical reasoning) and an inductive reasoning step for the student to draw conclusions from re-examining the situation (inductive reasoning) and confronting their decisions with those of a panel of experts.

On the other hand, the rest of the platforms tend to limit their feedback to correct or incorrect answers, to give medical information about the topic or the disease presented in the case and to provide certain advice to reach a certain conclusion. In contrast, in Practicum Script the student receives personalised feedback, based on experience-based medicine and evidence-based medicine, which he/she can transfer to daily practice, and which fosters his/her autonomy and self-confidence to make decisions.

Quality and identity are established by difference. Practicum Script presents cases with a comprehensive clinical history and not just short vignettes designed to give predefined answers. Moreover, the cases are real and are set in contexts of complexity and uncertainty, always in line with the formative stage of the clinical cycle in question. This complexity, which does not refer to the level of difficulty is irreducible and is increasingly present in acute and chronic patients. So, what is the difference between Practicum Script and other clinical simulation models? Authenticity and the encouragement of higher-level thinking, as well as the teaching quality of a team of 30 to 40 medical experts (comprising an editorial board from leading universities, an international group of validators and a group of local auditors).

Our model is on the pre-practice in vivo rung of Miller's pyramid and overcomes the limitations of traditional approaches, which focus on theoretical knowledge. Besides it adresses clinical complexity and corrects cognitive failures, which are responsible for 75% of medical errors. The psychometrics of a recent study by Hornos E. [7] reveal that it does so with a high level of reliability and validity.

The SCT: popular, but questioned

In the same vein, although the Script Concordance Test (SCT) is the best-known methodology for assessing clinical reasoning, it has been widely criticised for its unreliable structure for measuring what it claims to measure and for its psychometric inconsistencies. Our own research [8] on the classical SCT has corroborated these difficulties and demonstrated validity problems in the way the SCT assumes expert responses as a reference standard for participants' notation.

Moreover, by excluding hypothesis generation, SCT amputates clinical reasoning. However, in Practicum Script the incorporation of heuristics, demanded by authors such as Feufel M.A. [9], puts the student on the track of his/her own suspicions, which he/she will then have to confirm or rule out on a Bayesian probability scale.

Finally, Kreiter C.D. [10] and many others point out that the notation of the SCT is highly questionable because of its aggregate scoring system and the lack of verification of the experts' answers with scientific evidence. In contrast, with Practicum Script, participants can compare their answers with those of experts without necessarily being scored as right or wrong, in order to help them understand and weigh different plausible perspectives. When there is controversy among the expert panellists, a Delphi method is used, and the value of informed divergence of opinion is highlighted. In addition, all expert responses are substantiated with literature that supports agreements and disagreements.

For Lineberry M. [8], " Even if a participant does not change the direction of their belief on a given situation, knowing the controversy, they might adjust their certainty and apply more divergent thinking going forward.” For the learner starting to have their first contacts with clinical reality, this helps to open their mind to differential diagnoses (and management alternatives), avoiding one of the main causes of medical error: premature diagnostic closure.

And what about AI?

Artificial intelligence (AI) has a huge potential, still under definition, to support medical education and even the care physicians can provide. In our case, for example, OCMALE, an acronym for Octopoda Machine Learning®, is a machine learning system for the automatic recognition of plausible hypotheses, with a high effectiveness in giving immediate feedback to participants in three languages (English, Spanish and Portuguese).

However, phenomenologically, all human beings are different and, consequently, randomness is unpredictable and uniqueness infinite. In controlled tests, AI has not proven capable of replacing human cognition and professional expertise to effectively measure clinical reasoning in contexts of uncertainty. For example, when trying to solve Practicum Script clinical cases with GPT-4o chat, the chatbot often fails in hypothesis generation because it formulates hypotheses based on statistics and clinical practice guidelines; on the other hand, AI-constructed clinical scenarios lack uncertainty and can be solved solely through the mobilisation of theoretical knowledge, slanting clinical reasoning.

Let's look at an example: a 68-year-old woman with atrial fibrillation with high ventricular rate and signs of dehydration secondary to vomiting accompanied by poor fluid intake, without manifestations of heart failure and with low blood pressure. Here chat GPT proposed one correct hypothesis (digoxin) and two invalid hypotheses (beta-blockers and calcium channel blockers), without considering that, in the particular context of this patient, the use of the latter two drugs carries a significant risk of aggravating arterial hypotension. On the other hand, it failed to recognise that the administration of intravenous fluids was the most reasonable therapeutic option in a situation where the cardiac arrhythmia was probably caused by dehydration.

References:

1. Miller G. The assessment of clinical skills/competence/performance. Acad Med 1990; 65: S63-7.

2. Shumway JM, Harden RM. AMEE Guide no. 25: The assessment of learning outcomes for the competent and reflective physician. Med Teach 2003; 25: 569-84.

3. Brailovsky CA. Educación médica, evaluación de las competencias. OPS/OMS, eds. Aportes para un cambio curricular en Argentina. Buenos Aires: University Press; 2001. p. 103-20.

4. Cooke S, Lemay JF. Transforming Medical Assessment: Integrating Uncertainty Into the Evaluation of Clinical Reasoning in Medical Education. Acad Med. 2017 Jun;92(6):746-751.

5. Hornos E, Pleguezuelos EM, Bala L, van der Vleuten C, Sam AH. Online clinical reasoning simulator for medical students grounded on dual-process theory. Med Educ. 2024 May;58(5):580-581.

6. Harden RM. Harden’s blog: News from Roanoke, MCQs are dead, Learning analytics and ethical issues, Goodhart’s law and citation numbers, and Inspiring students (or trying to). URL: https://www.mededworld.org/hardens-blog/reflectionitems/July-2019/HARDEN-S-BLOG-News-from-Roanoke-- MCQs-are-dead--Le.aspx. [05.07.2019].

7. Hornos E, Pleguezuelos E, Bala L, Collares CF, Freeman A, van der Vleuten C, Murphy KG, Sam AH. Reliability, validity and acceptability of an online clinical reasoning simulator for medical students: An international pilot. Med Teach. 2024 Mar 15:1-8.

8. Lineberry M, Hornos E, Pleguezuelos E, Mella J, Brailovsky C, Bordage G. Experts' responses in script concordance tests: a response process validity investigation. Med Educ. 2019 Jul;53(7):710-722.

9. Feufel MA, Flach JM. Medical education should teach heuristics rather than train them away. Med Educ. 2019 Apr;53(4):334-344.

10. Kreiter CD. Commentary: The response process validity of a script concordance test item. Adv Health Sci Educ Theory Pract. 2012 Mar;17(1):7-9.

Our personalized help center enables you to obtain technical support and help for navigating through the site and using the program.